Apple announced the M4 chip, a powerful new upgrade that will arrive in next-generation iPads (and, further down the line, the best MacBooks and Macs). You can check out our beat-by-beat coverage of the Apple event, but one element of the presentation has left some users confused: what exactly does TOPS mean?

TOPS is an acronym for ‘trillion operations per second’, and is essentially a hardware-specific measure of AI capability. More TOPS means faster on-chip AI performance; in this case, that means the Neural Engine found on the Apple M4 chip.

The M4 chip is capable of 38 TOPS – that’s 38,000,000,000,000 operations per second. If that sounds like a staggeringly massive number, well, it is! Modern neural processing units (NPUs) like Apple’s Neural Engine are advancing at an incredibly rapid rate; for example, Apple’s own A16 Bionic chip, which debuted in the iPhone 14 Pro less than two years ago, offered 17 TOPS.

Apple’s new chip isn’t even the most powerful AI chip about to hit the market – Qualcomm’s upcoming Snapdragon X Elite purportedly offers 45 TOPS, and is expected to land in Windows laptops later this year.
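For a sense of scale, here is a small Python sketch that expands the TOPS figures quoted above into raw operations per second and compares them. The numbers are the headline figures mentioned in this article (manufacturer claims, not our own measurements), and the comparison assumes they were measured in the same way, which is worth keeping in mind given the caveats covered later on.

```python
# Headline TOPS figures quoted in this article (manufacturer claims).
QUOTED_TOPS = {
    "Apple A16 Bionic": 17,
    "Apple M4": 38,
    "Qualcomm Snapdragon X Elite": 45,
}

TRILLION = 10**12  # the "T" in TOPS


def tops_to_ops(tops: int) -> int:
    """Expand a whole-number TOPS figure into raw operations per second."""
    return tops * TRILLION


for chip, tops in QUOTED_TOPS.items():
    # Thousands separators make the size of the number readable.
    print(f"{chip}: {tops} TOPS = {tops_to_ops(tops):,} operations per second")

# Relative comparisons between the quoted figures.
m4_vs_a16 = QUOTED_TOPS["Apple M4"] / QUOTED_TOPS["Apple A16 Bionic"]
x_elite_vs_m4 = QUOTED_TOPS["Qualcomm Snapdragon X Elite"] / QUOTED_TOPS["Apple M4"]
print(f"M4 vs A16 Bionic: {m4_vs_a16:.2f}x")          # M4 vs A16 Bionic: 2.24x
print(f"X Elite vs M4: {x_elite_vs_m4:.2f}x")          # X Elite vs M4: 1.18x
```

On paper, then, the M4's Neural Engine offers a little over twice the A16 Bionic's quoted figure.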

How is TOPS calculated?

The methods we use to measure AI performance are still in their relative infancy, but TOPS provides a useful and accessible metric for judging how ‘good’ a given processor is at handling AI tools.

I’m about to get technical, so if you don’t care about the mathematics, feel free to skip ahead to the next section! The current industry standard for calculating TOPS is TOPS = 2 × MAC unit count × Frequency / 1 trillion. ‘MAC’ stands for multiply-accumulate; a MAC operation is basically a pair of calculations (a multiplication and an addition) that are run by each MAC unit on the processor once every clock cycle, powering the formulas that make AI models function. Every NPU has a set number of MAC units determined by the NPU’s microarchitecture.
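To make the ‘multiply-accumulate’ idea concrete, here is a minimal Python sketch of a dot product (the kind of calculation neural networks perform over and over) built from MAC steps, with each step counted as two operations. This is only an illustration of the counting: a real NPU performs these steps in dedicated hardware, with many MAC units working in parallel, and the function name here is our own.

```python
def dot_product_with_op_count(a: list[float], b: list[float]) -> tuple[float, int]:
    """Compute a dot product as a chain of multiply-accumulate (MAC) steps.

    Each MAC step is one multiplication plus one addition, so it counts as
    two operations, which is where the "2 ×" in the TOPS formula comes from.
    """
    if len(a) != len(b):
        raise ValueError("vectors must be the same length")
    acc = 0.0  # the running total (the "accumulator")
    ops = 0
    for x, y in zip(a, b):
        acc += x * y  # one MAC: multiply, then add into the accumulator
        ops += 2      # count the multiplication and the addition separately
    return acc, ops


result, ops = dot_product_with_op_count([1.0, 2.0, 3.0], [4.0, 5.0, 6.0])
print(result, ops)  # 32.0 6
```

Three MAC steps produce six operations; an NPU's TOPS rating is essentially this count scaled up across every MAC unit and every clock cycle in a second.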

‘Frequency’ here refers to the clock speed of the processor in question: specifically, how many clock cycles it completes per second. It’s a common metric also used for CPUs, GPUs, and other components, essentially denoting how ‘fast’ the component is.

So, to calculate how many operations per second an NPU can handle, we simply multiply the MAC unit count by 2 to get the number of operations per clock cycle, then multiply that by the frequency. This gives us an ‘OPS’ figure, which we then divide by a trillion to make it a bit more palatable (and kinder on your zero key when typing it out).
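Here is that calculation as a short Python sketch. The MAC unit count and clock speed below are purely hypothetical, since this article doesn’t give those figures for the M4 or any other chip; the second function simply runs the same formula in reverse to show how many MAC units a given TOPS rating would imply at an assumed clock speed.

```python
def peak_tops(mac_units: int, frequency_hz: float) -> float:
    """Theoretical peak: TOPS = 2 × MAC unit count × frequency / 1 trillion."""
    ops_per_cycle = 2 * mac_units               # each MAC = one multiply + one add
    ops_per_second = ops_per_cycle * frequency_hz
    return ops_per_second / 1e12                # scale down to trillions


def mac_units_for(tops: float, frequency_hz: float) -> float:
    """Rearranged formula: MAC units implied by a TOPS rating at a given clock."""
    return tops * 1e12 / (2 * frequency_hz)


# Hypothetical NPU: 4,096 MAC units clocked at 1.0 GHz.
print(peak_tops(4096, 1.0e9))        # 8.192

# Hypothetical clock: how many MAC units would 38 TOPS imply at 1.0 GHz?
print(mac_units_for(38, 1.0e9))      # 19000.0
```

Because the formula is a straight multiplication, doubling either the MAC unit count or the clock speed doubles the theoretical TOPS figure.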

Simply put, more TOPS means better, faster AI performance.

Why is TOPS important?

TOPS is, in the simplest possible terms, our current best way to judge the performance of a device for running local AI workloads. This applies both to the industry and the wider public; it’s a straightforward number that lets professionals and consumers immediately compare the baseline AI performance of different devices.

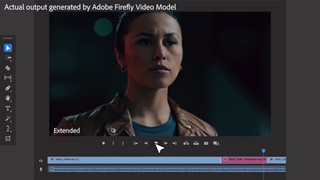

TOPS is only applicable to on-device AI, meaning that cloud-based AI tools (like the internet’s favorite AI bot, ChatGPT) don’t typically benefit from a higher TOPS figure on your own device. However, local AI is becoming more and more prevalent, with popular professional software such as the Adobe Creative Cloud suite starting to implement more AI-powered features that depend on the capabilities of your device.

It should be noted that TOPS is by no means a perfect metric. At the end of the day, it’s a theoretical figure derived from hardware specifications and can differ greatly from real-world performance. Factors such as power availability, cooling, and overclocking can affect the actual speed at which an NPU can run AI workloads.
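One way to see why the headline number can mislead is that the formula assumes the chip runs at its rated clock. The Python sketch below reuses the same formula with a hypothetical NPU whose clock drops by 20% under power or thermal limits; all three values are invented for illustration, and this simple model ignores every other factor that affects real-world AI performance.

```python
def peak_tops(mac_units: int, frequency_hz: float) -> float:
    """Theoretical peak: TOPS = 2 × MAC unit count × frequency / 1 trillion."""
    return 2 * mac_units * frequency_hz / 1e12


MAC_UNITS = 4096        # hypothetical MAC unit count
RATED_HZ = 1.0e9        # hypothetical rated clock speed (1.0 GHz)
SUSTAINED_HZ = 0.8e9    # hypothetical clock when limited by power or heat

rated = peak_tops(MAC_UNITS, RATED_HZ)
sustained = peak_tops(MAC_UNITS, SUSTAINED_HZ)

print(f"Rated: {rated:.3f} TOPS")                   # Rated: 8.192 TOPS
print(f"Sustained: {sustained:.3f} TOPS")           # Sustained: 6.554 TOPS
print(f"Shortfall: {1 - sustained / rated:.0%}")    # Shortfall: 20%
```

The same logic works in the other direction: an overclocked NPU running above its rated frequency would, on paper, exceed its advertised TOPS.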

On that front, though, we’re now starting to see AI benchmarks crop up, such as Procyon AI from UL Benchmarks (makers of the popular 3DMark and PCMark benchmarking programs). These can provide a much more realistic idea of how well a given device handles real-world AI workloads. You can expect to see TechRadar running AI performance tests as part of our review benchmarking in the near future!